Six times Facebook ignored their own community standards when removing content

[vc_row][vc_column][vc_column_text]

Facebook has recently received much criticism over its removal of content and its lack of transparency about the reasons. Although it maintains its right as a private company to remove content that violates community guidelines, many critics claim this disproportionately targets marginalised people and groups. A report by ProPublica in June 2017 found that Facebook’s secret censorship policies “tend to favour elites and governments over grassroots activists and racial minorities”.

The company claims in its community standards that it doesn’t censor posts that are newsworthy or raise awareness, but this clearly isn’t always the case.

The Rohingya people

Most recently, almost a year after the human rights groups’ letter, Facebook has continuously censored content related to the Rohingya people, a stateless minority who mostly reside in Burma. Rohingya have repeatedly been banned from Facebook for posting about atrocities committed against them. The story resurfaced amid claims that Rohingya people will be offered sterilisation in refugee camps.

Refugees have used Facebook as a tool to document accounts of ethnic cleansing against their communities in refugee camps and in Burma’s conflict zone, Rakhine State. These areas range from difficult to impossible for reporters to reach, which makes first-hand accounts especially important.

Rohingya activists told the Daily Beast that their accounts are frequently taken down or suspended when they post about their persecution by the Burmese military.

Dakota Access Pipeline protesters

In September 2016 Facebook admitted removing a live video posted by anti-Dakota Access Pipeline activists in the USA. The video showed police arresting around two dozen protesters, but after the link was shared, viewers were denied access.

Facebook blamed the removal on its automated spam filter, a feature that is often criticised as vague and secretive.

Palestinian journalists

In the same month as the Dakota Access Pipeline video, Facebook suspended the accounts of editors from two Palestinian news publications based in the occupied West Bank without providing a reason. There are no reports of the journalists violating the networking site’s community standards, but the editors allege their pages may have been censored because of a recent agreement between the US social media giant and the Israeli government aimed at tackling posts inciting violence.

Facebook later said in a statement: “Our team processes millions of reports each week, and we sometimes get things wrong.”

US police brutality

In July 2016 a Facebook Live video was censored after it showed the aftermath of US police shooting a black man in his car. Philando Castile was asked to provide his license and registration but was shot while attempting to do so, according to Lavish Reynolds, Castile’s girlfriend, who posted the video.

The video does not appear to violate Facebook’s community standards. According to these rules, videos depicting violence should only be removed if they are “shared for sadistic pleasure or to celebrate or glorify violence”.

“Facebook has long been a place where people share their experiences and raise awareness about important issues,” the policy states. “Sometimes, those experiences and issues involve violence and graphic images of public interest or concern, such as human rights abuses or acts of terrorism.”

Reynolds’ video was posted to condemn wrongful violence and was therefore appropriate to show on the website.

Facebook blamed the removal of the video on a glitch.

Swedish breast cancer awareness video

In October 2016, Facebook removed a Swedish breast cancer awareness video that depicted cartoon breasts. The breasts were abstract circles in different shades of pink. The purpose of the video was to raise awareness and to educate, meaning that by Facebook’s standards, it should not have been censored.

The video was reposted and Facebook apologised, claiming once again that the removal was a mistake.

The Autumn issue of Index on Censorship magazine explored the censorship of the female nipple, which occurs both online and offline in many parts of the world. In October 2017 a Facebook post by Index’s Hannah Machlin on the censoring of female nipples was removed for violating community standards.

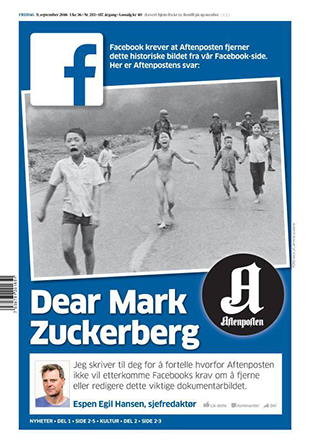

“Napalm girl” Vietnam War photo

A month earlier, in a serious blow to media freedom, Facebook removed an iconic photo from the Vietnam War. The widely published photo is famous for revealing the atrocities of the war, especially their effect on innocent people such as children.

In a statement made following the removal of the photograph, Index on Censorship said: “Facebook should be a platform for … open debate, including the viewing of images and stories that some people may find offensive, is vital for democracy. Platforms such as Facebook can play an essential role in ensuring this.”

The newspaper whose post was censored published a front-page open letter to Mark Zuckerberg accusing the CEO of abusing his power. After public outrage and the open letter, Facebook released a statement claiming it is “always looking to improve our policies to make sure they both promote free expression and keep our community safe”.

Facebook’s community standards state that the company removes photos of sexual assault against minors, but they don’t mention historical photos or those that do not contain sexual assault.

The young woman shown in the photo, who now lives in Canada, released her own statement saying: “I’m saddened by those who would focus on the nudity in the historic picture rather than the powerful message it conveys. I fully support the documentary image taken by Nick Ut as a moment of truth that capture the horror of war and its effects on innocent victims.”[/vc_column_text][/vc_column][/vc_row]