David Cameron has announced plans to block access to pornography online, with providers offering the choice to turn on a filter.

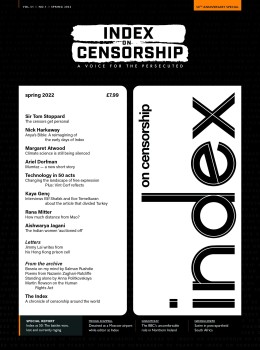

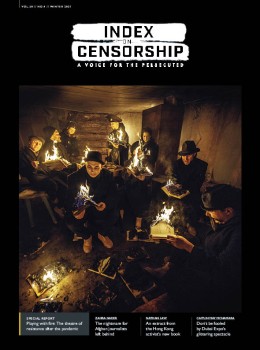

In a 2009 edition of Index on Censorship magazine, Seth Finkelstein examines how indiscriminate blocking systems can be a source of censorship.

Obscenity online is posing some of the greatest challenges to free speech advocates and censors. Seth Finkelstein explains why.

When people talk of a topic such as obscenity, they almost always treat it as an intrinsic property, as if something either is, or isn’t, obscene (just as a woman is or isn’t pregnant). But in fact, in the US, definitions vary from state to state, enshrined in law as “community standards”, which means that obscenity is a geographic variable, not a constant. Something cannot be legally adjudicated obscene for all the world, but only within a particular community. And standards can vary widely between cities such as New York or San Francisco and, say, Cincinnati or Memphis.

This has profound implications for obscenity on the Internet and for censorship.

In the case of Nitke v. Ashcroft, in which I served as an expert witness, a court tried to grapple with these difficulties and found them daunting. In 2001, Barbara Nitke, an American photographer known for her erotic portraits of the BDSM [bondage, domination, sadomasochism] community, filed a lawsuit challenging the constitutionality of the Communications Decency Act — a federal statute that prohibits obscenity online.

Nitke argued that the Internet does not allow speech to be restricted by location. Yet anyone posting explicit material risks prosecution according to the standards of the most censorious state in the country. Nitke claimed that this violated her First Amendment rights. She lost the case in 2005: the court ruled that she had presented insufficient evidence to convince the judges of her argument.

The question of definitions is also fundamental to government attempts to censor obscene material online. The most popular method of attempting regulation of obscenity is secret blacklists in the shape of “censorware”, often relying heavily on purely algorithmic determinations.

Censorware is software that is designed and optimised for use by an authority to prevent another person from sending or receiving information.

This fundamental issue of control is the basis for a profound free speech struggle that will help define the future shape of worldwide communications, as battles over censorship and the Internet continue to be fought.

The most common censorware programs consist of huge secret blacklists of prohibited sites and a relatively simple engine that compares the sites a user attempts to view against some of the blacklists. There are some more exotic systems, but they have many flaws and are beyond the scope of this article (though I’ll note that software that claims to detect “flesh tones” typically has a very restrictive view of humanity). While blacklists related to sexual material garner the lion’s share of attention, it’s possible to have dozens of different blacklists. For example, “hate speech” is another contentious category.
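To make the mechanism concrete, here is a minimal sketch in Python of a blacklist engine of the kind described above. It is an illustration under stated assumptions, not a reconstruction of any real product: the category names, the hostnames and the function `is_blocked` are all hypothetical, and actual censorware will differ in its details.

```python
from urllib.parse import urlsplit

# Hypothetical blacklists, keyed by category. Real products ship far larger
# lists, usually hidden or encrypted; these entries are invented examples.
BLACKLISTS = {
    "sex": {"adult-example.test", "sexed-clinic.example"},
    "hate": {"hate-example.test"},
}


def is_blocked(url, enabled_categories):
    """Return the first enabled category whose list contains the URL's host,
    or None if no enabled list matches."""
    host = (urlsplit(url).hostname or "").lower()
    # Strip a leading "www." so both forms of a hostname match one entry.
    if host.startswith("www."):
        host = host[4:]
    for category in enabled_categories:
        if host in BLACKLISTS.get(category, set()):
            return category
    return None


# A sex-education page is blocked simply because its host is on the list.
print(is_blocked("http://www.sexed-clinic.example/contraception", ["sex"]))  # sex
# The same engine ignores lists that the authority has not switched on.
print(is_blocked("http://hate-example.test/", ["sex"]))  # None
```

In this sketch the engine asks only whether a hostname appears on an enabled list. It has no notion of context, jurisdiction or why an entry was added, so an educational site that lands on the list is blocked exactly as a commercial one would be.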

Note I do not use the word “filter”. I believe once you concede the rhetorical framework of “filters” and “filtering”, you have already lost. This is not a matter of mere partisan politics. Rather, there’s an important difference in how the words used may channel thought about the issue. To talk of a “filter” conjures up a mental image of ugly, horrible, toxic material that is being removed, leaving a clean and purified result, as with a coffee filter or a dirt filter. One just wants to throw the ugly stuff away. Now consider: if we have wide-ranging disagreements on the differences between what is socially valuable erotica, tawdry but legal pornography, and illegal obscenity, how could a computer program ever make such artistic distinctions?

Crucially, censorware blacklists do not ordinarily encompass legal matters such as community standards or even the criterion “socially redeeming value”. They do not take into account geographic variation at the level of legal jurisdictions. There is no due process, no advocacy for the accused, no adversary system. Everything is done in secret. Indeed, examining the lists themselves is near impossible, since they are frequently hidden and/or encrypted.

In 1995, I was the first person to decrypt these blacklists, and found they were not just targeting commercial sex sites, but some had also blacklisted feminism, gay rights, sex education and so on. While this was a revelation in general, especially to various interested parties who were touting censorware as a saviour in complicated politics, I was not personally surprised. I viewed it as an inevitable historical outcome when censor-minded people are given free rein to act without accountability.

I was eventually forced to abandon the decryption research due to lack of support and rising legal risk. But the lessons of what I, and later others, discovered, have a direct bearing on current debates surrounding national censorware systems. Although ordinarily promoted as covering only obscenity and other illegal material, without checks and balances there can be no assurance against mission creep or political abuse. When there’s no ability to examine decisions, the relatively narrow concept of formal obscenity can become an expansive justification for wide-ranging suppression.

Blocking material that is considered obscene also has wider repercussions for free speech – and its regulation. If a control system can actually prevent teenagers in the West from getting sex-related content, it will also work against citizens in China who want to read political dissent. And inversely, if political dissenters can escape the constraints of dictatorial regimes, teenagers will be able to flout societal and parental prohibitions. It’s worth observing that those who argue that the Chinese freedom-fighters are morally right, while teenagers interested in sex are morally wrong, are not addressing the central architectural questions.

There is, in fact, much more of an interest in sex than in rebelling against dictatorships. So it’s conceivable that there could be a worst of both worlds result, where authoritarian governments could have some success in restricting their citizens’ access to information, but attempts to exclude masses of sexual information are ultimately futile.

Furthermore, there is an entire class of websites dedicated to preserving privacy and anonymity of reading, by encrypting and obscuring communications. These serve a variety of interests, from readers who want to leave as little trail as possible of their sexual interests, to dissidents not wanting to be observed seeking out unofficial news sources. The many attempts by dictatorial regimes to censor their populations have spurred much interest in censorware circumvention systems, especially among technically minded activists interested in aiding democratic reformers. Sometimes, to the chagrin of those working for high-minded political goals, the major interest of users of these systems is pornography, not politics. But that only underscores how social issues are distinct from the technical problem.

Recall again the importance of how the debate is framed. If the question is “Resolved: Purifiers should be used to remove bad material”, then civil libertarians are already at a profound disadvantage. But if instead the argument is more along the lines of “Resolved: Privacy, anonymity, even language translation sites, must not be allowed, due to their potential usage to escape prohibitions on forbidden materials”, then that might be much more favourable territory for a free speech advocate to make a case.

In examining this problem, it’s important not to get overly bogged down in a philosophical dispute I call the “control rights” theory versus the “toxic material” theory. Many policy analysts concern themselves with working out who has the right to control whom, and in what context. The focus is on the relationship between the would-be authority and the subject. In contrast, a certain strain of moralist considers forbidden fruit akin to a poisonous substance, consumption of which will deprave and corrupt. It is the information itself that is considered harmful.

Adherents of these two competing theories often talk past one another. Worse, “control rights” followers sometimes tell “toxic material” believers that the latter should be satisfied with solutions the former deem proper (e.g. using censorware only in the home), which fails to grasp the reasoning behind the divide between the two approaches.

This article originally appeared in Index on Censorship magazine’s issue on obscenity, “I Know it When I See it” (Volume 38, issue 1, 2009).